Jamwise #17 - Music Recommendation Algorithms: How They Work and What We Can Learn From Them

Plus: Bill Withers, Harry Styles, Led Zeppelin, Pink Floyd, The Roots, Simon and Garfunkel

First, to any who might have noticed, apologies for the newsletter slowly and silently slipping from Tuesday to Thursday mornings. I’d blame this on some higher purpose like groundbreaking data that releasing on Thursdays is better for your mental health or something, but the truth is I’ve been writing with all the speed of a fourth grader with a dull crayon. Plus this week’s article needed a little more brain-baking time.

This week’s post was inspired by a super interesting paper I stumbled across about music recommendation engines and how they work.

Now, I’m no academic, but I do have an engineering and data analytics background, so I’m just dumb enough to pretend I understand parts of this paper. Despite the technical details that went way over my head, I found it fascinating for what it reveals about the thought processes behind the algorithms that drive what we listen to. It’s both an endorsement of what the authors call “content-driven recommendation systems” and a review of the approaches and challenges in the field of music recommendation, and I think we can learn a lot from it as music fans (and occasional recommenders).

Here’s the link to the article, which I reference throughout this post, to save all the footnotes and such: https://www.sciencedirect.com/science/article/pii/S1574013724000029.

You might be asking yourself, why would my occasionally anti-algorithm newsletter dive so deeply into the way the enemy’s mind works? Well, first of all, it’s pretty fascinating, and second, I think knowing how music recommendation systems work can help us as listeners understand our tastes and the choices we make a little better. Just as I wrote before about music critics and how we should take their opinions with a grain of salt, we should also use recommendation algorithms wisely - that includes knowing when we’re being exposed to an algorithm, and also having a general awareness of how that algorithm works and what its goals or biases are.

So now, with open minds and open eyes, we’re going to enter the mind of the beast.

The paper, from Computer Science Review (CSR, for short), was essentially a review of the many research projects focused on music recommendation systems. I won’t go into everything, and I certainly won’t get into the technical portions that are far beyond my scope of understanding, but there were some really interesting points and trends that we should keep an eye on as music fans.

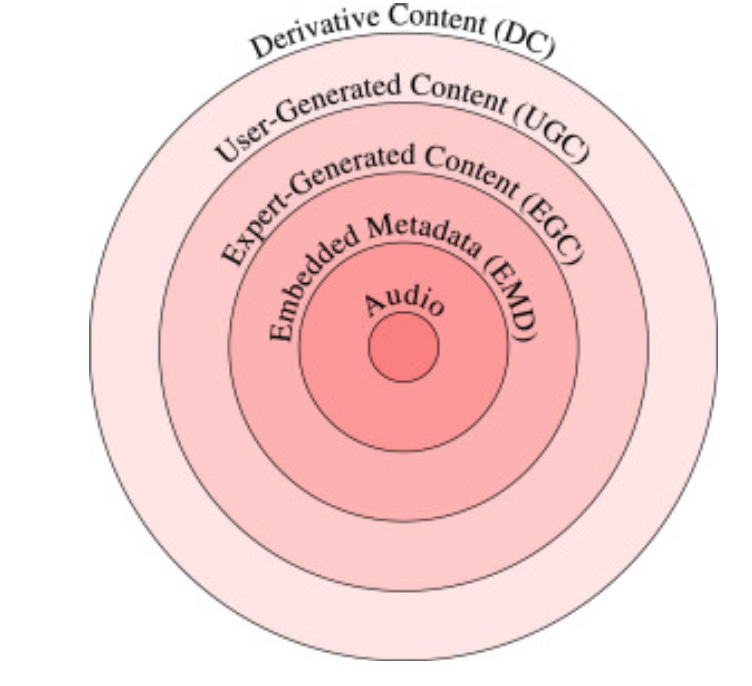

There are a million ways to describe a piece of music. Think about all the things you could say if I asked you to describe “Hotel California” by the Eagles. You could tell me the name of the album and artist, the year it was released, the names of the band members - that’s what an algorithm-maker would call “metadata”: the facts and other indisputable stuff about the song. Or you might tell me about the 12-string guitar riff, Don Henley’s raspy vocals, the vibe that evokes a rehab clinic that everybody’s trying to escape - a programmer might call that audio information. Or you might tell me critics loved the album, or all your friends loved the album - that would be Expert- or User-generated content related to the song.

I guess you could also tell me you hate the Eagles, but I highly doubt I’ll hear you.

Now imagine you had to make a rating system that combined all of those different kinds of data. It has to work for everybody, for every song, for every kind of taste (even Eagles-haters). Not to mention that everybody has a different mix of factors that matter to them, and everybody also rates different aspects of the song differently. Oh yeah, and I forgot to mention you also work for a multi-national for-profit corporation that makes its money based on how long people keep listening to the songs you recommend, not on how often they discover new favorite songs. That’s the challenge facing the developers of music recommendation systems.

(Side note: part of why I liked this article was the fact that all of these challenges are also faced by music critics and recommenders who aren’t code jockeys. Picking music for other people is tricky business.)

You might call the quest to create such a system a fool’s errand. But there are a lot of smart people out there working on ways to better understand all of those traits of music and more besides.

The CSR article defines a framework for these musical traits that most recommendation systems use to some degree:

Image Credit: Computer Science Review via ScienceDirect

These categories might look familiar. The point the authors are making is that, if we want to have recommendation systems that can’t be as easily gamed by corporations or tastemakers, the central aspect of music recommendations should be the music itself, and everything else surrounding the music is less and less directly relevant as we go outward in the circle (they call it the “onion model”). The other interesting thing is that the farther out in the model you go, the more opinionated the factors become, which means that they are valued less than the music itself. At least in theory.

This isn’t how all algos work, but it’s a pretty intuitive way to understand how they have to prioritize different musical traits. The way I think about it, the traits at the center of the onion are usually the most likely to make me like a song, and those on the outside the least likely: the Audio, aka the way the song sounds, is number 1, while Derivative Content (e.g. Weird Al parodies) is probably the last reason I’d like a new song. OK, maybe Weird Al was a bad example - but you get the idea. It’s not a perfect model in 100% of cases, but it’s pretty close in 90% of cases, and I’ll bet most streaming services would take that.

(Side note 2: I wonder if Weird Al, as in Weird Alfred, knows his name kinda looks like Weird AI, as in Weird Artificial Intelligence.)
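To make the onion idea a bit more concrete, here is a toy scoring function in Python. It’s a minimal sketch under my own assumptions, not anything taken from the CSR paper: it assumes each layer already has a similarity score between 0 and 1 (how close a candidate song is to what you like on that layer), the layer names loosely follow the categories mentioned above, and the weights are made-up numbers that simply shrink as you move outward from the audio.

```python
# Toy "onion model" scorer. Assumptions (not from the CSR paper): every layer
# already has a similarity score in [0, 1], and the weights below are invented
# numbers that shrink from the audio at the center toward the outer layers.
LAYER_WEIGHTS = {
    "audio": 0.40,               # how the song actually sounds
    "metadata": 0.25,            # artist, album, release year
    "expert_content": 0.15,      # critic reviews and ratings
    "user_content": 0.12,        # listener tags, friends' likes
    "derivative_content": 0.08,  # covers, parodies, remixes
}


def onion_score(layer_similarity: dict[str, float]) -> float:
    """Weighted sum of per-layer similarities; a missing layer counts as 0."""
    return sum(weight * layer_similarity.get(layer, 0.0)
               for layer, weight in LAYER_WEIGHTS.items())


# A song that sounds a lot like your favorites vs. one that is only a parody of them.
sound_alike = onion_score({"audio": 0.9, "metadata": 0.2})  # roughly 0.41
parody_only = onion_score({"derivative_content": 1.0})      # 0.08
print(sound_alike > parody_only)  # prints: True
```

With these weights, a song whose only connection to your favorites is a parody (0.08 at most) loses to anything that sounds even halfway like them. The genuinely hard part in real systems is computing those per-layer similarities in the first place, which this sketch skips entirely.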

There were six main challenges or goals the authors presented that music algorithm makers are working on. These challenges can be understood as different ways of combining the different layers of the onion model above - weighting the traits differently, defining them differently, and even calculating them differently behind the scenes in computer land. I’d like to spotlight a few challenges that were particularly interesting.

Increasing recommendation diversity and novelty

This might seem obvious - of course this is what we all hope the geniuses at the algorithm factories are working on. Give us better recommendations! Make every song in my Discover Weekly a banger! And do it now!

But there’s an interesting quote about the risk of over-optimizing the recommendation engine that resonates deeply with me: “these approaches do not allow users to explore and understand their musical taste and increase the risk of trapping users in a self-strengthening cycle of opinions without motivating them to discover alternative genres or perspectives.”

Boom. The researchers absolutely nailed it. This is 10000% what happened to my Spotify that prompted me to start this project in the first place. An echo chamber of brooding dad rock that was created by me liking a few brooding dad rock songs. This is classic over-engineering, and it’s encouraging that the engineers involved in this paper recognize this risk. Finding a way to avoid over-optimizing, however, is the key - common approaches seem to be combining the musical similarity metrics with other things like social metrics, aka which of your friends liked the song, and probably some randomness to encourage new recs as well.
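Here’s one way that blend could look, as a hypothetical Python sketch rather than any streaming service’s actual method. It mixes audio similarity with the share of your friends who liked a song, then stands in for “some randomness” with an epsilon-greedy rule (a standard exploration trick from the multi-armed bandit literature, swapped in here as an example): most of the time it plays the top-scoring song, but with probability `epsilon` it picks a random candidate instead. The function names, the 70/30 weighting, the 10% default exploration rate, and the song titles are all assumptions for illustration.

```python
import random
from typing import Optional


def blended_score(similarity: float, friend_share: float,
                  sim_weight: float = 0.7) -> float:
    """Mix 'sounds like what you already like' with 'your friends liked it'.

    Both inputs are assumed to be in [0, 1]; sim_weight is a made-up 70/30 split.
    """
    return sim_weight * similarity + (1 - sim_weight) * friend_share


def pick_next_song(candidates: dict[str, tuple[float, float]],
                   epsilon: float = 0.1,
                   rng: Optional[random.Random] = None) -> str:
    """Epsilon-greedy choice of the next song.

    candidates maps a song title to (audio similarity, share of friends who liked it).
    """
    if rng is None:
        rng = random.Random()
    if rng.random() < epsilon:
        # Explore: ignore the scores and take a chance on any candidate.
        return rng.choice(sorted(candidates))
    # Exploit: play the candidate with the highest blended score.
    return max(candidates, key=lambda title: blended_score(*candidates[title]))


# Hypothetical candidates: (audio similarity, share of friends who liked it)
songs = {
    "Brooding Dad Rock #47": (0.95, 0.1),  # sounds just like your likes
    "Friend's Pick": (0.5, 0.9),           # your friends love it
    "Wildcard": (0.1, 0.0),                # something totally different
}
print(pick_next_song(songs, epsilon=0.0))  # prints: Brooding Dad Rock #47
```

Turning `epsilon` up is a crude dial between “more of what you already like” and “something new.” The echo chamber described above is roughly what you get with a similarity-heavy score and `epsilon` stuck at zero.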

This is the challenge of recommending more diverse music. There are incentives for the algorithm to keep people listening longer, and introducing new music into the mix might not always hit right and keep people engaged. However, there are also powerful incentives to actually recommend new music, such as the demands of fans and artists. And how do you define “music recommendation diversity”? Does that entail new music within a user’s preferred genre? Does it include totally new genres as well? Does it mean recommending across gender, race, and other sociological lines? The answer is probably yes to each, but when a computer program has to pick just one song to play next, at some point it has to prioritize some of these diversity factors over others. And it’s the humans making the programs who set the rules for which kinds of diversity or novelty are prioritized in certain situations - quite a challenge, indeed.

Another good quote: “Another research direction for breaking the filter bubble is to increase users’ awareness of their consumption patterns.” This makes perfect sense to me, and I hope it happens. Being aware of my own music consumption patterns is the reason I started Jamwise, and I think everyone who enjoys music would benefit greatly from knowing why they like certain songs. What if Spotify could explain what music makes me happy, or sad, or just sounds the best according to my taste profile? And what if they were willing to share that data with me in the name of educating me about my own likes and dislikes? That would be amazing for me as a consumer, even if it is a pipe dream.

“Providing transparency and explanation”

You can interpret this aspect of the algorithms in many ways. Many would say transparency about how companies use listeners’ data is most important, and I won’t beat that dead horse here. But another interesting point is that users can benefit from understanding how and why the algorithm recommended a song. Hey, that’s the whole reason I read this paper and wrote this article, woohoo!

It’s a nice thought that the algorithms would explain themselves when they recommend a song. That’s already kind of happening in some ways, like the playlists Spotify suggests “because you listened to…” and the creepy-ass DJ feature they added a while back that introduces a set of a few songs it “hand picked” from playlists I made myself.

It would be nice as a listener to see even more deeply into why a song was recommended. But I suspect that’s revealing too much for the big companies to be comfortable with, and even if the engineers find good ways to trace why an algorithm recommends a song, we as listeners might never be privy to that information.
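For a feel of how a simple “because you listened to…” explanation could be traced, here is a hypothetical Python sketch (not how Spotify does it). It assumes every song has already been boiled down to a small feature vector; the three features here (energy, danceability, acousticness, each scaled 0 to 1) and all the numbers are made up, not real measurements. It explains a recommendation by naming the track in your history whose vector points in the most similar direction, using cosine similarity.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def explain_recommendation(recommended: list[float],
                           history: dict[str, list[float]]) -> str:
    """Name the previously played track most similar to the recommendation."""
    closest = max(history,
                  key=lambda title: cosine_similarity(recommended, history[title]))
    return f"Because you listened to {closest}"


# Made-up features for illustration: [energy, danceability, acousticness]
history = {
    "Time": [0.6, 0.7, 0.3],
    "Cecilia": [0.9, 0.8, 0.6],
    "The Rain Song": [0.3, 0.3, 0.9],
}
print(explain_recommendation([0.25, 0.35, 0.85], history))
# prints: Because you listened to The Rain Song
```

This only works because the toy recommender uses one signal. Once a real system blends audio, social, editorial, and business signals, tracing a single “because” gets much harder, which is part of why those explanations stay vague.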

So what do you think? If we’re doomed to a world controlled by algorithms, are the current trends in the music recommendation algorithm world serving us as listeners? Or are there other ways the algorithms should work instead?

Personally, I think the goals presented are good, but I doubt we’ll see algorithms performing the way the authors describe, due to the conflicting interests of the profit-driven companies paying to implement them. True, an algorithm that provides perfect recommendations all the time, with just the right mix of new songs, would be a boon to Spotify et al. And improving the quality of recommendations would certainly seem to give streaming companies of all forms a boost. But the issue, as the authors describe, is that there are a million ways to define “improvement.” Do novel recommendations really increase listening minutes? Does a perfect explanation of the algorithm’s choices really make the listener more inclined to listen longer? And how do any of these sorting methods

In the end, I think the best outcome of reading the paper has been reflecting on what makes me, a music fan, love music. There are many dimensions to liking a song, and even more to liking an artist. Do I think a computer program can calculate and categorize all of the ways I love music? No, not really. But I think there’s a time and a place for such automated recommendations, even for the most die-hard anti-algorithm analog music lovers, and I’m encouraged by knowing that the algorithm makers are considering listeners’ needs first and foremost, even if the big streaming companies’ algorithms are ultimately not so optimized for music fans.

In a previous life, I was an engineer automating a manufacturing line. I learned a lot about automation and its drawbacks, as well as its benefits. Music recommendation algorithms are the same in many ways - they’re trying to automate a process of selecting music, and to automate anything, you have to break it down into a series of simple, foolproof tasks that a machine can do on its own. That works better for some kinds of tasks than for others.

On the one hand, automating that process makes sense because of the sheer amount of data that’s out there about music. Millions of songs, billions of words written about those songs, and exponentially more data about who listens to those songs: that’s impossible for a human to sort through. So if we want to sort or recommend music using all that data, automation is the only real choice.

But there’s a key assumption baked into that approach - that the data we can quantify into 1’s and 0’s is the right data to use for music selection. Can you really name a time when you decided you loved a song because it had a high “danceability quotient”? Or because its aggregated critical rating was higher than 4.5? I’m making these criteria up, but the point is that the human brain processes music in a far more nuanced way than can be summarized in numbers. If you’re looking for a deep, thought-out recommendation, you’re really asking for far more data than a computer can handle - data a human mind can intuit in milliseconds. You’d need a complete model of everyone’s individual brain to really create a flawless recommendation, and if we get to that point, we might have bigger concerns as a society than what to listen to for our backyard barbecue party.

The advances of the music algorithms feel like they’re nearing a critical limitation - they can only model a relatively small percentage of the variables that make up a human being, and those variables have infinite combinations and interpretations. The algorithms will always be a one-size-fits-all solution in a music industry where, by definition, there’s no such thing. Maybe there will one day be totally personalized algorithms that each user can tweak and control and make their own, but until someone finds a way to make money on such a non-scalable idea, I won’t hold my breath.

The machines can have their shallow, unthinking recommendations. Let them do the busy work of sorting and filing our music, the grunt work that humans don’t have the time to do. As a music fan, that’s a task that I need done, to save me time and get me a shortlist of good music faster.

But when it comes to the deeper, personal music recommendations, I don’t see a reason for algorithms to take over.

So even if I let the algorithms into my musical life in some ways, I’ll keep seeking music in places where I know there’s no engineer trying to reduce art to 1’s and 0’s - the minds of other humans.

Project B.A.E. - Best Albums Ever

Keeping it short this week since I’ve already nerded you half to death.

Just As I Am - Bill Withers

Bill Withers’ debut album is only familiar to me because of the mega-hit “Ain’t No Sunshine.” Its spot at number 304 on the Rolling Stone list means there must be some other gems here, and based on the Spotify listen counts, it seems like I’m not alone in only really knowing one song from this album. Bill’s voice is so soulful and emotional - it carries through the whole album and gives it the same folk-bluesy-soul character we all know well from “Ain’t No Sunshine.”

Brain Rating: 9

Taste Rating: 6

Jams

“Ain’t No Sunshine”

“Grandma’s Hands”

Things Fall Apart - The Roots

This album seemed to unfold like a play following dramatic structure. I got the feeling there’s an overall story there, although I wasn’t quick enough to really capture it. I want to listen again to understand the story better - it was downcast and moody, but it felt like a movie.

Brain Rating: 8.5

Taste Rating: 5

Jams

“The Next Movement”

“The Spark”

“Ain’t Sayin’ Nothin’ New”

Fine Line - Harry Styles

The 491st greatest album of all time contains bop after bop with more depth than I expected. I don’t have anything wise to say about it, but I actually found myself jamming hard to this album. Now I partially understand my sister’s Harry Styles tattoo that my mom hates so much. OK, not really, but at least I tried.

Brain Rating: 6

Taste Rating: 7

New Jams

“Golden”

“She”

“Canyon Moon”

Bridge Over Troubled Water - Simon and Garfunkel

The last album from Simon and Garfunkel, this is one of those albums that reminds me of the infomercials that came on at 1 am selling CDs of the greatest easy listening songs of the ’70s. At the time I liked to make fun of my mom for loving Simon and Garfunkel, but like many of the bands she likes, they are actually pretty fantastic when heard with adult ears. Of course, I knew that long before my most recent listen to this album, but what still sticks out to me now is the artful blend of genres and the wide range of feelings this album evokes. Excellent.

Brain Rating: 8

Taste Rating: 7

Jams

“Bridge Over Troubled Water”

“The Boxer”

“Cecilia”

The Dark Side Of The Moon - Pink Floyd

This album made me realize a big reason I think I disliked some great music as a kid. I remember listening to this album on my old CD player and being too impatient to really give it due attention. But now I know why. I was listening with the cheapest pair of headphones I could get my little hands on. They probably had a frequency range of like negative numbers. This album and others suffered as a result.

I’ve obviously grown to love this album as I’ve grown up and gained access to proper headphones that don’t sound like a tinny loudspeaker in a middle school gym. But man, I could have had a much more enlightened teen life if I’d just ditched the stolen airplane headphones earlier.

Brain Rating: 9.5

Taste Rating: 9

Jams

“Us and Them”

“Time”

“Money”

Houses Of The Holy - Led Zeppelin

Just another old favorite of mine, with some songs that will never leave my brain. I’ll know every note of “The Rain Song” by heart even when I’m old and can’t remember my name. I’d probably be able to play the riff to “Over The Hills And Far Away” while having brain surgery. This is an all-timer for me and just gets me psyched every time I spin it.

Brain Rating: 8.5

Taste Rating: 10

Jams

“The Rain Song”

“The Ocean”

“Over The Hills And Far Away”